Romain Dolbeau works as an HPC Expert at the Center of Excellence in Parallel Computing of Bull, an Atos company. In this role, he works with pre-sales and support teams to help Atos customers fully leverage the computing power and energy savings brought by many-core technologies.

Romain, tell us a bit about yourself.

I grew up in a Paris suburb, where a substitute teacher at my primary school introduced me to computing. I later studied computer science at the Université Paris-Sud, before moving to Rennes to work on a PhD in computer architecture under Prof. André Seznec at the ENS Cachan (now the ENS de Bretagne).

How did your career path lead you to where you are now? How did you move into HPC?

It was during my PhD that I co-founded the compiler company CAPS entreprise with other students, research engineers and Prof. François Bodin. I joined CAPS as an employee, both as a system administrator and as the resident computer architecture guy among the many compiler experts.

It was during my decade at CAPS that I really discovered HPC. The company's focus quickly shifted from general-purpose and embedded compilers to HPC, particularly after we introduced HMPP, the first directive-based programming model for hybrid computing, in 2007.

Most early adopters of these technologies (hybrid computing and HMPP) were large-scale HPC users, and we had to understand their needs to serve them well and to improve our brand-new technology.

Ultimately, I became a pure HPC expert, working with our customers and partners to get the best out of their hardware and software investment.

So what does your job involve?

I joined Bull, now an Atos company, as an HPC Expert after CAPS closed. In this role, I again work with our customers, partners and other teams at Atos to fully leverage their hardware for HPC. The work takes different forms depending on the specific project.

Some clients need training. They are experts in their own fields, whether quantum physics, hydrodynamics or climate prediction, but not necessarily in computer science. So I train them, to varying degrees of expertise, in the fields relevant to HPC: hardware, compilers, parallelism and so on. Some might only need an entry-level course to avoid beginner's mistakes, while others may sign up for several days of intensive training in vectorisation, cache exploitation and similar topics. The goal is for them to write new numerical codes that are better suited to the underlying hardware, so that they can do their science faster.

Other clients need expertise or consulting work. The code already exists and they are knowledgeable in the required subjects, but they want even more from their hardware. I work with them to study their codes and adapt them to current or future hardware. Sometimes minimal changes are enough; sometimes entire parts of the code need to be reworked to fit the machine. This requires up-to-date knowledge of both the hardware and the software tools, and it can be quite challenging, since some of these customers are already experts themselves.

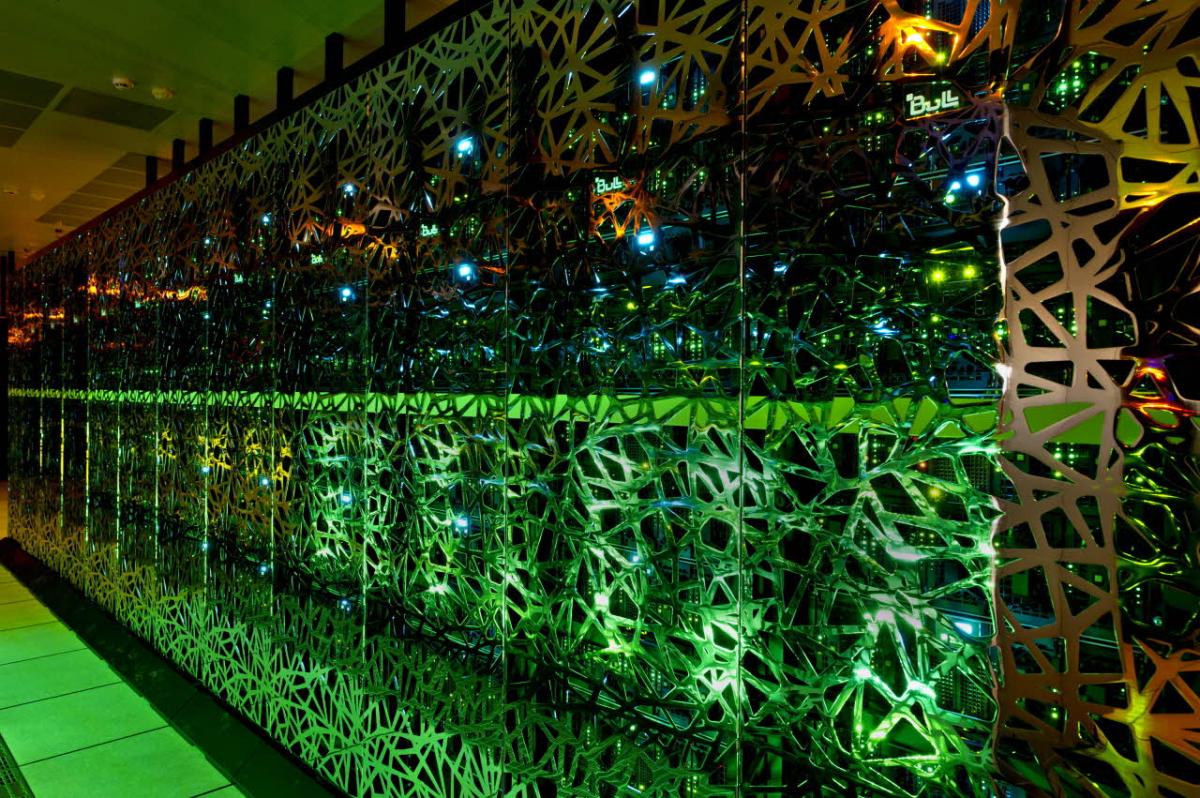

The Très Grand Centre de Calcul (TGCC), near Paris (left) and CURIE, a Bull supercomputer at the TGCC. Copyright: Cadam/CEA

What are the exciting aspects of working in an HPC-related career?

I need to constantly study the state of the art in my field to keep up with innovations and methodologies, so that I can have up-to-date answers for our customers. And since those customers come from every background, there is always something new to learn from them and new challenges born from different requirements.

Where do you see your career leading you next, and what is your outlook on the use of HPC in your field?

HPC has only grown bigger and more relevant since I started in the field. Numerical simulation has become ubiquitous in every science and every industrial process, but so have the data-mining and deep-learning algorithms of the social-networking and internet-of-things era. All of them require massive amounts of computation.

So, at least in the near future, I expect my job to remain broadly similar to what it is now. The technologies are constantly evolving, so yesterday's advice might be tomorrow's mistake, or vice versa. Hybrid computing is now common at the high end but was non-existent less than a decade ago. GPUs are ubiquitous, but alternatives such as the Xeon Phi or PEZY-SC are challenging them. The debate between proponents of many small cores and supporters of a few large cores is as intense as it ever was. Non-volatile memory is rising fast and threatening assumptions common in many user codes, and perhaps even in operating systems. Optical communication is shaking things up by moving from fibre optics to silicon photonics. Intel's leadership in conventional CPUs is under threat from the manufacturers of mobile devices.

So even with the same job description, the ever-changing landscape of HPC is what keeps it interesting for me.